How to convince your lead that quad trees are awesome? Make a bulky geo lookup 100x faster

Date Published

I don't care much about motivational speeches! So this isn't one. I care about return on experience (a spin on ROI if you wish). I care about sharing! And I care about showing off a pretty cool thing I did. Though it might not be very impressive, it is to me :D

This article may as well be named "How to 100x a bulk geographic lookup." Or perhaps "How to speed [it] up from twenty to two minutes." In one of my projects, we need to keep up with hundreds of high priority targets moving around the globe and being tracked relatively regularly via GPS trackers. All that, in order to track where they go, and more importantly: are they in particular user-defined areas?

Fair warning! This is going to go into mathematical details. After all, I had to prove that solution was, in fact, faster than the current implementation. However, note that I am in no way good at math!! In fact, I had pretty bad grades. But, apparently, I was good enough to convince my seniors! Do feel free to point out if you see issues :D

Initial implementation

A very simple and straightforward way you would implement it would be to take the list of target positions you need to check, take the list of areas and the coordinates that make them up, and match them together. We end up with a complexity of , where is the number of positions to process, and the number of areas to match them with. At least, it's not quadratic... sure. Now, this doesn't sound too bad, does it? It makes sense that the complexity would scale like that. After all, those are the two main factors of the entire operation.

You would be right. But, how ever you look at it, it poorly scales as gets larger. By our measurements, matching one position against all areas was taking around three to four seconds. If you have one hundred targets, then the entire operation would take around six minutes. This already restricts you from real-time or minute-by-minute monitoring. Sure, this might not be your usecase anyway. Perhaps you need it every half hour, or every hour. Let's say that your business expand, and you start having to monitor upwards to two, three, or four hundred, you soon reach a point where the next batch arrives before you finished processing the previous one.

Sure, you could increase the specs of your machine and whatnot, or parallelise the operations. But there is a "simple" and quite elegant solution to all these issues!

The Solution

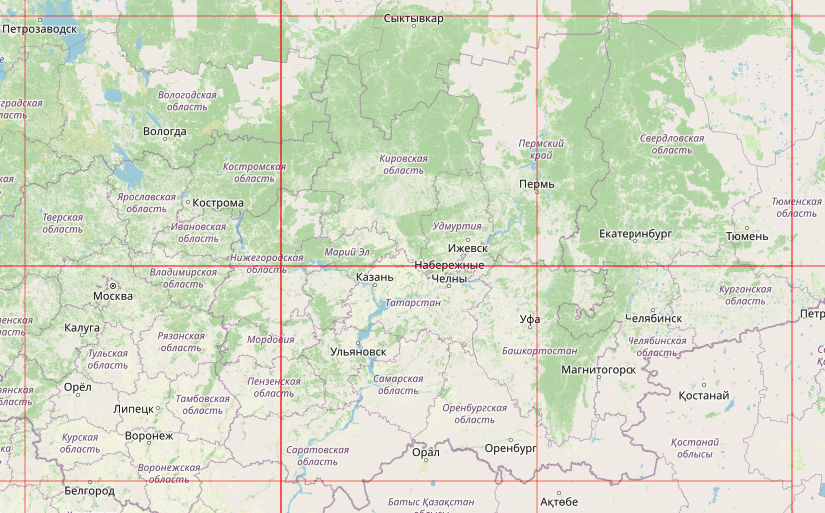

Looking at how our problem scales, the only way we could lower the load and improve the performance would be to lower , the number of positions to process, or , the number of areas to match. Now, we can't lower . After all, we need to process every position! And you might think we can't lower either, but it's not entirely infeasible. A lot of the time spent matching a position is spent on areas that would have no chance at all to even match. Even if a position is in Russia, we still spend precious time looking into areas focused in the UK or France! This is a huge waste of CPU resources!

You could think that if we knew if an area has a chance to match with a position, or none at all, then this would lead to the same scalability issues. This would be true if we were to think to terms of absolute certainty. In our first implementation, we are making sure that there is no area that wasn't properly matched in order to rule them out. What if there was a way to trustworthily rule out a swath of areas with minimal effort?

This is where quad trees come into play.

What's a quadtree?

The first time I encountered quadtrees was during a Code Game Jam, where I had to develop a game with a team in 72 hours. That was where I initially saw them used in practice. At the time, they felt almost overengineered for what I needed.

Turns out, they were not.

A quad tree is a tree data structure used in computer science, particularly in computer graphics, geographic information systems (GIS), and image processing. It is a hierarchical tree structure in which each node has four children, hence the name. The primary purpose of a quad tree is to partition a two-dimensional space into smaller regions (quadrants) to efficiently organise and search data. This hierarchical subdivision allows for efficient spatial indexing and retrieval of information. Quad trees are commonly used in applications where spatial data needs to be efficiently organised and queried, such as in image compression, collision detection, and spatial databases.

The basic idea is to recursively subdivide a space into four quadrants until a certain condition is met. Each node in the quad tree represents a rectangular region, and it either contains data or further subdivides its region into four smaller quadrants. There are different types of quad trees, including:

- Point: Each node represents a point in space, and the subdivision is based on the spatial location of points.

- Region: Each node represents a region or area of space, and the subdivision is based on the spatial extent of these regions.

- Hybrid: A combination of point and region quadtree, where nodes can represent either points or regions.

Quad trees provide an efficient way to perform spatial queries, such as range searches, nearest neighbour searches, and intersection tests, by exploiting the hierarchical structure and organising data based on spatial proximity. For our use, we need a Region quad tree (subsequently referred to as tree) where each Region (also referred to as a node) will contain a list of the areas that are (entirely or partially) within it.

Before processing the positions, we build said tree. We start at the root node, which is the entire map that contains all areas. When instantiated, this node will subsequently split itself into four quadrants and compute which areas intersect with each quadrant. Each of them becomes a node and the process repeats itself, each quadrant splitting itself into more quadrants and so on and so forth until a maximum deepness threshold (noted d) is reached.

The number of nodes at a specific deepness level with is . The total number of nodes in the entire tree is which is, using a geometric series formula, simplified to . Thus, the complexity of building such a tree is .

Disclaimer: This formula does not compensate for the fact that with each branching, we decrease the number of areas since we are splitting them into four quadrants.

Note: The higher is, the lower at a specific location will be. However, passed a certain threshold, we will reach , the minimum that can reach at a given coordinate. This threshold is unknown and depends on the areas spatial distribution and many other factors, however, we postulated that is a sufficient value past which no relevant improvements were observed with production data. Therefore, subsequent mentions of will be considered as the range constant .

How do we use it?

Once built, we can query the areas at a given coordinate by giving the root node a coordinate. The root node will then determine which of its quadrants contains the coordinate and ask it recursively until we reach a leaf node (a node without subnodes). When we reach the leaf node, we retrieve its list of areas and return it recursively giving us which areas are intersecting with the region of the leaf node.

As you can see, this doesn’t give us EXACTLY what we want, which is the position’s areas. It only gives us the areas in the surroundings of the position we are processing. But that is still quite useful for us, since we are precisely trying to minimize the number of areas we need to process.

As we said previously, to decrease the processing time, we need to minimize . We minimized by giving us only those who are intersecting, partially if not exactly, the position. And reading the tree costs only (simplified by equivalence to ) which is to say that for each layer of the tree, we check 4 quadrants until we reach the leaf and return.

Thus, instead of needing to check all areas in , we only need to check a subset where , and the number of areas inside the leaf node where the length of with .

Is it actually better?

As long as the cost of a) building the tree, b) reading it, and c) checking collisions on is lower than the cost of checking collisions on , then it will stay a better solution. In mathematical expression, our quadtree will be better if:

While this might look like the quadtree implementation is worse than the current implementation, we can observe the following points:

- The first term is not dependent on the number of positions we need to process. Therefore, it will stay constant as increases.

- The second term is not dependent on the number of areas we need to process. Therefore, it will stay constant as increases.

- Also note that, as previously stated, is a constant equal or lower than five, meaning that we could safely reduce: . Which can be then integrated with the third term:

Which gives us this simplified expression:

Let’s name the first term of the inequality as NEW, and the right one () as OLD. Keeping in mind that if , this means that the new method costs more and thus is less performant.

Note: In practice and based on production data, in a 5-deep leaf node, the average number of areas is 6. Meaning that on average which would help reduce further to However, to keep a generalistic view of this problem, we will keep the unreduced version.

We know already that , this means that will always be true and thus lets us know that querying the tree will never be worse than the current implementation. However, the tree building term () poses an issue.

This expression demarks itself from as it scales exponentially with d which shouldn’t be higher than 5. This means that in practice, . In addition, we also need to take into consideration the term that scales with which itself depends on the spatial distribution of . Taking the median number of areas per leaf node that we will note , the closer comes to , the less spatially distributed is. Noting that we can measure the spatial distribution of by how close is to .

More formally: , and thus that .

Thus, for to be true, we need:

Note that we need to switch the sign of the inequality at the last step since if , then meaning that it will be negative. In conclusion, the higher is, the larger needs to be for . In other words:

In natural language: The (more concentrated)/(less spread out) the areas are, the more coordinates we need to process for the quadtree to be performant. Which makes sense, since the tree is built on the assumption that we can minimize the number of areas by removing those that are far away from a given position. But if all areas are all in the same spot, then the minimization optimization of the tree will do nothing there, though it will reduce the position processing load anywhere else to null.

Conclusion

As previously stated, in our case (on average) and let's say we have 108 user-defined areas to match, so . This gives us:

This indicates that for the quadtree to be more performant than the current implementation with a deepness of 5, a total of 108 areas to check, and an average number of area per leaf node of 6, we need at least ~4 coordinates to process. Less than that and the quadtree solution becomes slower.

In other words, building the tree takes around the same time as it would take to process four coordinates using the initial implementation.

Knowing in our case, we have hundreds of them, this requirement is easily fulfilled. In fact, we have thousands of them which further emphasizes the point.

What an adventure, don't you think? Math is quite useful sometimes.And funnily enough, a bit of knowledge from game development turned out to be exactly what this unrelated geo problem needed.

Concepts travel well. Sometimes, what you learn for one domain ends up solving another.